How to cache Contentful data in a Google Cloud Storage bucket

Recently, our email service gained two new requirements:

- It needs to support customization for different partners.

- It needs to adopt the new master email template.

So we can create the new email templates in Contentful (a CMS), an approach with both advantages and disadvantages:

Pros:

- Front-end developers no longer need to modify text or images in code.

- The repo code will be much cleaner.

- All email templates will share one style.

Cons:

- If the Contentful server goes down while our email service calls the Contentful API directly, our email service goes down too. So we need to cache the Contentful email template data somewhere else; we chose a Google Cloud Storage bucket.
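A minimal Python sketch of the email service's read path, assuming the service reads the cached template JSON from the bucket and calls Contentful only when no cached copy exists (that cache-miss fallback is our assumption, not part of the original setup). `load_template` and the two reader callables are hypothetical; in a real service they would wrap the Google Cloud Storage client and the Contentful API.

```python
import json
from typing import Callable, Optional

# Hypothetical readers, kept as plain callables so the sketch is self-contained.
BucketReader = Callable[[str], Optional[str]]  # returns JSON text, or None if missing
ContentfulReader = Callable[[str], dict]       # may raise when Contentful is down


def load_template(template_id: str, read_bucket: BucketReader,
                  read_contentful: ContentfulReader) -> dict:
    """Return template data, preferring the cached copy in the bucket."""
    cached = read_bucket(template_id)
    if cached is not None:
        # Normal path: Contentful is never contacted, so its outages don't matter.
        return json.loads(cached)
    # Cache miss (e.g. a template not exported yet): fall back to Contentful.
    return read_contentful(template_id)


def contentful_down(template_id: str) -> dict:
    # Simulates an outage of the Contentful API.
    raise ConnectionError("Contentful unavailable")


bucket = {"welcome": '{"subject": "Hello"}'}
print(load_template("welcome", bucket.get, contentful_down))  # {'subject': 'Hello'}
```

With a cached copy present, the template still loads even though the Contentful reader would fail.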

To guard against Contentful outages, we need to cache the data from Contentful. We found two ways to do it:

- A Contentful webhook calls a Google Cloud Function, which generates the template data files and uploads them to the bucket.

- A Contentful webhook triggers a GitLab pipeline, which generates the template data files and uploads them to the bucket.
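Both options share the "generate template data files" step. Here is a minimal sketch of it, assuming the webhook delivers an entry in Contentful's default entry shape, where every field is keyed by locale (`{"fields": {"subject": {"en-US": "..."}}}`). The function names and the `<entry id>.json` file naming are hypothetical. Files go to the system temp directory because on Google Cloud Functions /tmp is the only writable location.

```python
import json
import os
import tempfile
from typing import Optional


def entry_to_template_data(entry: dict, locale: str = "en-US") -> dict:
    """Flatten {"fields": {name: {locale: value}}} into {name: value} for one locale."""
    fields = entry.get("fields", {})
    return {name: by_locale.get(locale) for name, by_locale in fields.items()}


def write_template_file(entry: dict, out_dir: Optional[str] = None) -> str:
    """Write <entry id>.json and return its path.

    Defaults to the system temp dir, which is normally /tmp on Cloud Functions.
    """
    out_dir = out_dir or tempfile.gettempdir()
    path = os.path.join(out_dir, f"{entry['sys']['id']}.json")
    with open(path, "w", encoding="utf-8") as f:
        json.dump(entry_to_template_data(entry), f, ensure_ascii=False)
    return path
```

The upload itself could then use the google-cloud-storage client, for example `storage.Client().bucket(BUCKET).blob(name).upload_from_filename(path)`, where `BUCKET` and `name` are your bucket and object names.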

Google Cloud Function

In a Google Cloud Function, temporary files must be written to the /tmp folder. Writing anywhere else will not work and usually fails with a no-write-access error.

If you want to fetch bucket files from GCP on your local machine, you need credentials; otherwise this error appears:

Could not load the default credentials. Browse to https://cloud.google.com/docs/authentication/getting-started for more information.

To fix it, run `gcloud auth application-default login`. If you haven't installed gcloud, install the Cloud SDK first: https://cloud.google.com/sdk/install.

In some places you may also see an error saying the Cloud Function does not have storage.objects.create access to the Google Cloud Storage object. To fix it, grant the function's service account an admin role on the storage bucket (for example Storage Admin) in IAM.
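To see why the credentials error appears locally, here is a simplified Python sketch of where Application Default Credentials are looked up on a developer machine: first the `GOOGLE_APPLICATION_CREDENTIALS` environment variable, then the file written by `gcloud auth application-default login` in gcloud's config directory. It follows the lookup order the Google auth libraries document but is not their code, and it skips the metadata-server step that makes ADC work inside Cloud Functions. `find_local_adc` is a hypothetical helper.

```python
import os
from typing import Mapping, Optional, Tuple

ADC_FILENAME = "application_default_credentials.json"


def find_local_adc(environ: Mapping[str, str] = os.environ,
                   home: Optional[str] = None) -> Optional[Tuple[str, str]]:
    """Return (source, path) of the local ADC file that would be used, or None."""
    explicit = environ.get("GOOGLE_APPLICATION_CREDENTIALS")
    if explicit:
        # An explicitly configured key file always wins.
        return ("GOOGLE_APPLICATION_CREDENTIALS", explicit)
    # gcloud's config dir: CLOUDSDK_CONFIG if set, else ~/.config/gcloud
    # (or %APPDATA%\gcloud on Windows).
    config_dir = environ.get("CLOUDSDK_CONFIG")
    if not config_dir:
        if os.name == "nt":
            config_dir = os.path.join(environ.get("APPDATA", ""), "gcloud")
        else:
            config_dir = os.path.join(home or os.path.expanduser("~"), ".config", "gcloud")
    path = os.path.join(config_dir, ADC_FILENAME)
    if os.path.isfile(path):
        return ("gcloud user credentials", path)
    # Nothing found: on a laptop (no metadata server) this is when
    # "Could not load the default credentials" is raised.
    return None
```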

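However, calling the Cloud Function from the webhook requires a gcloud access token, and that token expires after 1 hour. Contentful doesn't provide a way to fetch a fresh gcloud access token dynamically, so a token pasted into the webhook soon stops working. The alternative, allowing public invocation, could expose the function to DDoS attacks.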

So we switched to a GitLab pipeline to respond to the Contentful webhook.

- Pros: supports triggering with an access token.

- Cons: triggering a pipeline takes much more time than calling a Google Cloud Function.

GitLab pipeline settings

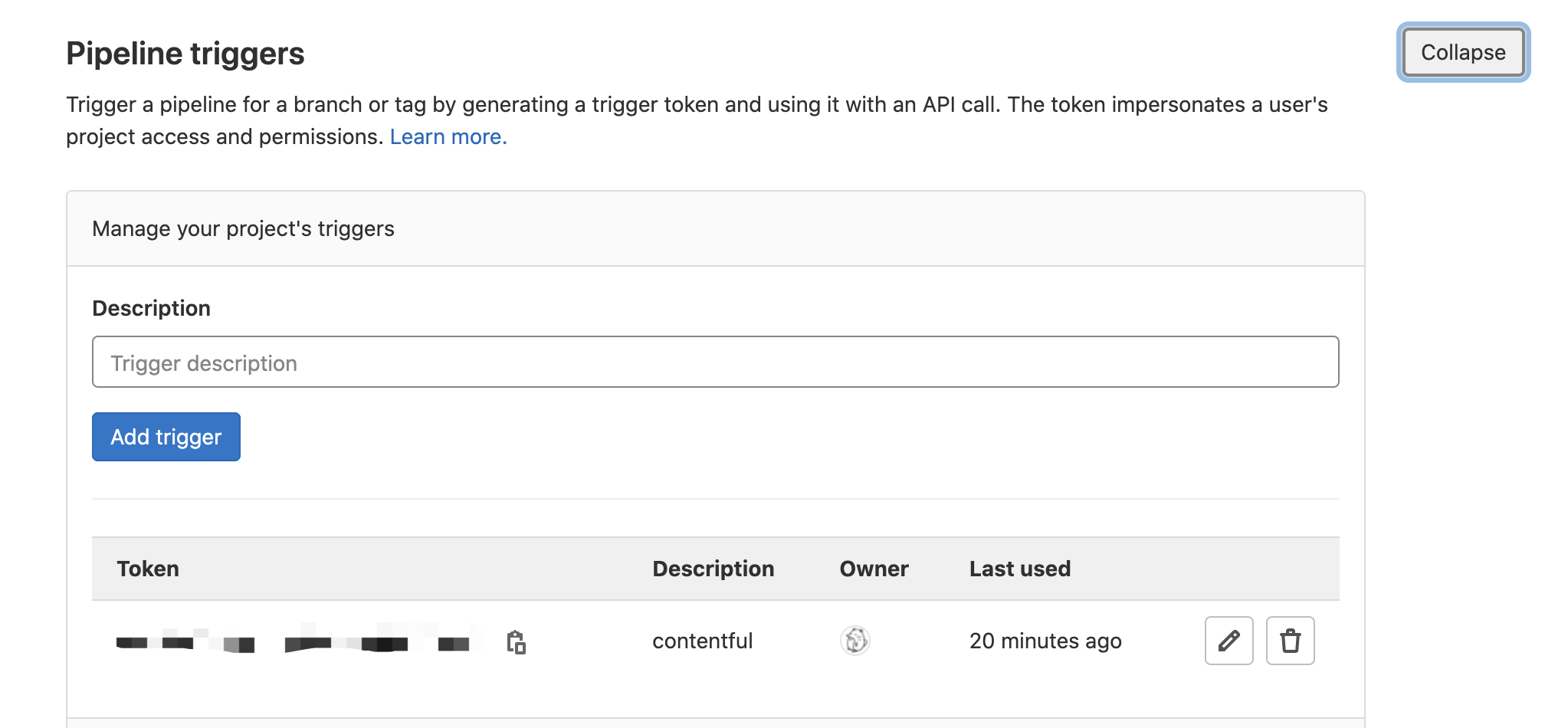

1. Generate a trigger token in GitLab under Settings > CI/CD > Pipeline triggers.

2. Copy this token into the Contentful webhook request body, as shown below.

3. To trigger for different environments, add a variable `TRIGGER_EVENT` whose value is `AUTO_SAVE` or `PUBLISHED`. Then, in the `.gitlab-ci.yml` file, stages can run conditionally on `TRIGGER_EVENT`.

4. Then trigger the pipeline as shown below.
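The steps above amount to one HTTP call to GitLab's pipeline trigger endpoint (`POST /api/v4/projects/:id/trigger/pipeline`), with the trigger token, the branch `ref`, and `TRIGGER_EVENT` passed as a CI/CD variable in a JSON body. Below is a minimal sketch that builds and sends that request; the GitLab host, project ID, and branch in the example are hypothetical. In the real setup the Contentful webhook sends this body itself, so a script like this is mainly useful for testing the trigger by hand.

```python
import json
import urllib.request
from typing import Tuple

ALLOWED_EVENTS = {"AUTO_SAVE", "PUBLISHED"}


def build_trigger_request(gitlab_url: str, project_id: int, token: str,
                          ref: str, trigger_event: str) -> Tuple[str, bytes]:
    """Return (url, JSON body) for GitLab's pipeline trigger endpoint."""
    if trigger_event not in ALLOWED_EVENTS:
        raise ValueError(f"unexpected TRIGGER_EVENT: {trigger_event}")
    url = f"{gitlab_url.rstrip('/')}/api/v4/projects/{project_id}/trigger/pipeline"
    body = {"token": token, "ref": ref, "variables": {"TRIGGER_EVENT": trigger_event}}
    return url, json.dumps(body).encode("utf-8")


def trigger_pipeline(url: str, body: bytes) -> dict:
    """POST the body, as the Contentful webhook would, and return GitLab's JSON reply."""
    req = urllib.request.Request(url, data=body, method="POST",
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


# Hypothetical values: replace with your GitLab host, project ID, token, and branch.
url, body = build_trigger_request("https://gitlab.example.com", 123,
                                  "TRIGGER_TOKEN", "main", "PUBLISHED")
```

Inside `.gitlab-ci.yml`, a job can then be limited to one event with a rule such as `if: '$TRIGGER_EVENT == "PUBLISHED"'`.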

Unless otherwise stated, all articles on this blog are licensed under CC BY-SA 4.0. Please credit the source when reposting!